Optimize Cloud Networks for Lightning-Fast Performance: Cloud Networking Latency Optimization

In cloud computing, latency is one of the most important factors in how fast applications feel. Cloud networking latency optimization aims to minimize delays and maximize performance, making your applications and services noticeably more responsive.

Latency, the bane of cloud networks, can hinder user experience, cripple productivity, and tarnish your reputation. But fear not, for this comprehensive guide will equip you with the knowledge and techniques to conquer latency and unlock the full potential of your cloud infrastructure.

Cloud Networking Latency Optimization

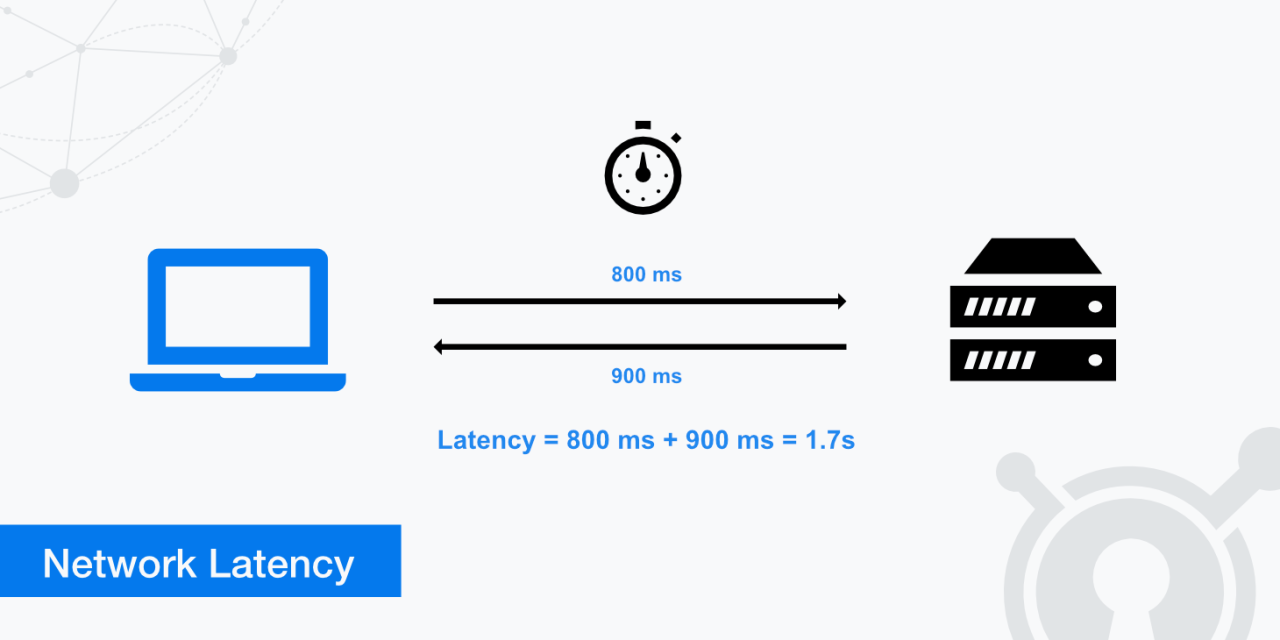

Latency optimization in cloud networking refers to the techniques and strategies employed to minimize the time it takes for data to travel across a cloud network. Optimizing latency is crucial for cloud-based applications and services, as it directly impacts user experience, application performance, and overall efficiency.

In cloud networks, latency can be influenced by various factors, including network architecture, traffic patterns, load balancing, and protocol choices. Addressing these factors and implementing effective optimization techniques can significantly reduce latency and improve the performance of cloud-based applications.

Challenges and Complexities

Optimizing latency in cloud networks presents several challenges, including:

- Network Complexity: Cloud networks are often complex, involving multiple interconnected components, such as virtual machines, containers, and network devices. Managing latency across such complex environments can be challenging.

- Dynamic Traffic Patterns: Cloud networks experience highly dynamic traffic patterns, with workloads and traffic flows constantly changing. Optimizing latency requires adapting to these dynamic conditions in real time.

- Cross-Region Communication: Cloud applications often span multiple regions or zones, introducing additional latency due to geographical distances. Optimizing cross-region communication is therefore critical.
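Distance sets a hard floor on cross-region latency. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so a useful rule of thumb is about 1 ms of round-trip time per 100 km of fiber. The sketch below turns that rule into a function. It assumes the 200 km/ms figure, ignores queuing, processing, and serialization delays, and uses illustrative path lengths rather than real routes, which are usually longer than the straight-line distance between regions.

```python
# Assumed speed of light in optical fiber (about 2/3 of c), in km per millisecond.
FIBER_KM_PER_MS = 200.0

def min_rtt_ms(fiber_path_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Queuing, processing, and serialization delays are ignored,
    so measured RTTs will always be higher than this.
    """
    if fiber_path_km < 0:
        raise ValueError("distance must be non-negative")
    one_way_ms = fiber_path_km / FIBER_KM_PER_MS
    return 2 * one_way_ms

# Illustrative fiber path lengths, not measurements of real routes.
for km in (100, 1000, 6000):
    print(km, "km ->", min_rtt_ms(km), "ms minimum RTT")
# 100 km -> 1.0 ms minimum RTT
# 1000 km -> 10.0 ms minimum RTT
# 6000 km -> 60.0 ms minimum RTT
```

A 6,000 km fiber path therefore costs at least 60 ms per round trip before any router or server adds delay, which is why keeping chatty services in the same region, or close to their users, often matters more than device-level tuning.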

Importance of Latency Optimization

Optimizing latency in cloud networks is crucial for several reasons:

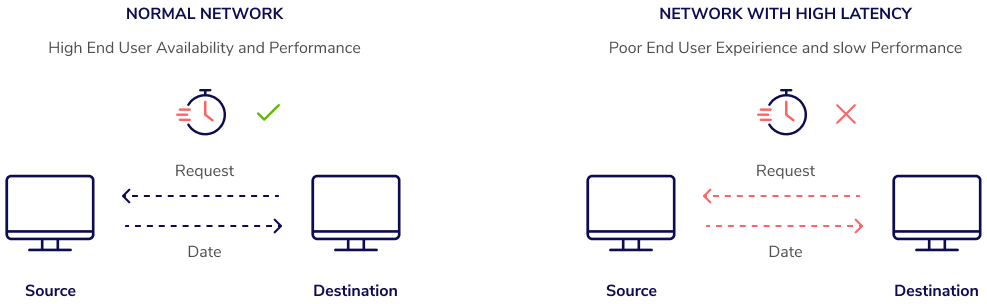

- Improved User Experience: Reduced latency leads to faster response times and improved user experience, particularly for interactive applications and real-time services.

- Enhanced Application Performance: Lower latency improves the performance of cloud-based applications, reducing delays and ensuring smooth operation.

- Increased Scalability: Optimized latency allows cloud networks to handle increased traffic and workload demands without compromising performance.

- Cost Optimization: Reducing latency can lead to cost savings by minimizing the need for additional infrastructure or overprovisioning of resources.

Techniques for Latency Reduction in Cloud Networks

Latency optimization in cloud networks involves implementing various techniques to minimize the time it takes for data to travel from source to destination. Here are some commonly used techniques:

Network Segmentation

Network segmentation divides a large network into smaller, isolated segments. This limits broadcast traffic and reduces the number of devices competing for shared bandwidth, which improves overall network performance.

Traffic Prioritization

Prioritizing critical traffic ensures that essential data receives preferential treatment, reducing latency for applications that demand real-time responsiveness. This can be achieved through techniques like Quality of Service (QoS) or traffic shaping.
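To make the idea concrete, here is a minimal sketch of strict-priority scheduling, one of the queuing disciplines used to implement QoS. The class always dequeues the most urgent packet first and keeps first-in, first-out order within each priority level. `StrictPriorityQueue`, the packet labels, and the priority numbers are hypothetical; in practice QoS is enforced by switches, routers, or the operating system's traffic-control layer, typically based on packet markings such as DSCP, rather than by application code.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Any, List

@dataclass(order=True)
class _Entry:
    priority: int                      # lower number = more urgent
    seq: int                           # tie-breaker keeps FIFO order within a priority
    packet: Any = field(compare=False)

class StrictPriorityQueue:
    """Hypothetical strict-priority scheduler: urgent traffic always goes first."""

    def __init__(self) -> None:
        self._heap: List[_Entry] = []
        self._counter = itertools.count()

    def enqueue(self, packet: Any, priority: int) -> None:
        heapq.heappush(self._heap, _Entry(priority, next(self._counter), packet))

    def dequeue(self) -> Any:
        return heapq.heappop(self._heap).packet

    def __len__(self) -> int:
        return len(self._heap)

q = StrictPriorityQueue()
q.enqueue("backup-chunk-1", priority=2)   # bulk traffic
q.enqueue("voip-frame-1", priority=0)     # real-time traffic
q.enqueue("web-request-1", priority=1)    # interactive traffic
q.enqueue("voip-frame-2", priority=0)
print([q.dequeue() for _ in range(len(q))])
# ['voip-frame-1', 'voip-frame-2', 'web-request-1', 'backup-chunk-1']
```

Strict priority can starve low-priority traffic when high-priority traffic is heavy, which is why real schedulers often pair it with rate limits or weighted fair queuing.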

Content Delivery Networks (CDNs)

CDNs distribute content from geographically dispersed servers, reducing the distance data must travel to reach end-users. By caching frequently requested content closer to users, CDNs significantly improve latency and enhance user experience.
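The core idea behind a CDN edge can be illustrated with a small least-recently-used (LRU) cache in front of a slower, distant origin: hits are served locally, and only misses pay the long round trip. This is a simplified sketch; `EdgeCache` and `fake_origin` are hypothetical, and real CDNs also honor HTTP caching headers, expiry times, and explicit invalidation.

```python
from collections import OrderedDict
from typing import Callable

class EdgeCache:
    """Hypothetical LRU edge cache: serve hits locally, fetch misses from the origin."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self._store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        if path in self._store:
            self._store.move_to_end(path)    # mark as most recently used
            self.hits += 1
            return self._store[path]
        self.misses += 1
        body = self.fetch_from_origin(path)  # slow, long-distance round trip
        self._store[path] = body
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return body

origin_calls = []

def fake_origin(path: str) -> bytes:
    # Stand-in for a request to a distant origin server.
    origin_calls.append(path)
    return f"content of {path}".encode()

edge = EdgeCache(capacity=2, fetch_from_origin=fake_origin)
for p in ["/logo.png", "/app.js", "/logo.png", "/style.css", "/app.js"]:
    edge.get(p)
print(edge.hits, edge.misses, origin_calls)
# 1 4 ['/logo.png', '/app.js', '/style.css', '/app.js']
```

The tiny capacity makes evictions visible: `/app.js` is evicted by `/style.css` and has to be fetched from the origin again, which is why CDN cache sizing and hit ratio matter so much for latency.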

Virtual Private Networks (VPNs)

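VPNs create secure, private connections over public networks. However, they can introduce additional latency due to encryption and tunneling overhead, and the extra tunnel headers can cause packet fragmentation if the MTU is not adjusted. To mitigate this, consider using high-performance VPN technologies, placing VPN gateways close to the workloads they serve, and tuning the tunnel MTU to avoid fragmentation.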

Cloud-Based Load Balancing

Load balancing distributes incoming traffic across multiple servers, preventing any single server from becoming overloaded and causing latency. Cloud-based load balancing services offer scalability and flexibility, allowing for automatic adjustment based on traffic patterns.

DNS Optimization

Optimizing DNS, for example by using a globally distributed (anycast) managed DNS service or implementing DNS caching, can reduce latency by minimizing the time it takes to resolve domain names into IP addresses.

Route Optimization

By optimizing routing policies and implementing techniques like direct Border Gateway Protocol (BGP) peering between networks, network engineers can shorten the paths data takes and reduce latency.

Hardware Acceleration

Utilizing hardware acceleration technologies, such as network interface cards (NICs) with specialized processing capabilities, can offload network processing tasks from the CPU, resulting in improved performance and reduced latency.

Cloud Network Architecture and Latency

Cloud network architecture plays a crucial role in determining latency. Factors like network topology, routing protocols, and load balancing significantly impact the time it takes for data to traverse the network. Understanding these factors and implementing best practices can help optimize cloud networks for low latency.

Network Topology

Network topology refers to the physical and logical arrangement of network components. A well-designed topology can minimize latency by reducing the number of hops between source and destination. A star topology keeps hop counts low but routes everything through a central node, while a mesh or partial-mesh topology provides multiple paths for data to flow, reducing the likelihood of congestion and delays.
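Fewer hops is a proxy for the real goal, which is the lowest total path latency. Treating the network as a graph with measured link latencies as edge weights, Dijkstra's shortest-path algorithm (the same computation link-state protocols such as OSPF run over their own cost metrics) finds that path. The topology, node names, and millisecond values below are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbor: link latency in ms}

def lowest_latency_path(graph: Graph, src: str, dst: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm using link latency as the edge cost."""
    best = {src: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, src)]
    visited = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == dst:
            break
        for nbr, link_ms in graph.get(node, {}).items():
            new_cost = cost + link_ms
            if new_cost < best.get(nbr, float("inf")):
                best[nbr] = new_cost
                prev[nbr] = node
                heapq.heappush(heap, (new_cost, nbr))
    if dst not in best:
        raise ValueError(f"no path from {src} to {dst}")
    # Walk predecessors back from the destination to rebuild the path.
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return best[dst], path[::-1]

# Hypothetical partial-mesh topology with one-way link latencies in milliseconds.
topology: Graph = {
    "app":    {"leaf1": 0.1},
    "leaf1":  {"spine1": 0.2, "spine2": 0.2},
    "spine1": {"leaf2": 0.2},
    "spine2": {"leaf2": 0.9},   # congested link
    "leaf2":  {"db": 0.1},
}
cost, path = lowest_latency_path(topology, "app", "db")
print(round(cost, 3), path)  # 0.6 ['app', 'leaf1', 'spine1', 'leaf2', 'db']
```

Both paths through the spines have the same hop count, but only a latency-weighted view avoids the congested link.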

Routing Protocols

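Routing protocols determine how data is forwarded through the network. Most routing protocols select paths by their own cost metrics rather than by measured latency, so favor designs that spread load and avoid congested paths. Equal-Cost Multi-Path (ECMP) forwarding, available with protocols such as OSPF and BGP (when multipath is enabled), distributes flows across several equal-cost paths instead of overloading a single one, which reduces congestion and the queuing delay it causes.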
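ECMP implementations typically keep all packets of a flow on the same path by hashing header fields such as the 5-tuple (source and destination IP, source and destination port, protocol), which avoids reordering while different flows spread across paths. The sketch below illustrates the idea using SHA-256 from Python's standard library; real switches and routers use their own hardware hash functions, and the addresses and path names are hypothetical.

```python
import hashlib
from collections import Counter
from typing import NamedTuple, Sequence

class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str

def ecmp_next_hop(flow: FiveTuple, next_hops: Sequence[str]) -> str:
    """Pick one of several equal-cost next hops by hashing the flow's 5-tuple."""
    key = "|".join(map(str, flow)).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]

paths = ["spine1", "spine2", "spine3", "spine4"]  # hypothetical equal-cost paths
flow = FiveTuple("10.0.1.5", "10.0.2.9", 49152, 443, "tcp")

# Every packet of the same flow maps to the same path, so packets are not reordered.
print(ecmp_next_hop(flow, paths) == ecmp_next_hop(flow, paths))  # True

# Many different flows (here, varying the source port) spread across the paths.
spread = Counter(
    ecmp_next_hop(FiveTuple("10.0.1.5", "10.0.2.9", port, 443, "tcp"), paths)
    for port in range(49152, 50152)
)
print(spread)
```

Because the hash ignores current load, two large flows can still land on the same path; ECMP reduces congestion on average rather than guaranteeing the fastest path for every flow.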

Load Balancing

Load balancing distributes traffic across multiple servers or network devices. This helps prevent congestion and ensures optimal performance. Use load balancing algorithms that consider latency as a factor, such as least-latency or weighted least-latency algorithms. These algorithms route traffic to the server or device with the lowest latency, minimizing delays.
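A least-latency algorithm needs a stable latency estimate for each backend. One common approach, sketched here, is an exponentially weighted moving average (EWMA) of observed response times. The class name, backend names, smoothing factor `alpha`, and 50 ms starting estimate are illustrative assumptions, not settings of any particular load balancer.

```python
from typing import Dict, Iterable

class LeastLatencyBalancer:
    """Hypothetical least-latency balancer using an EWMA of observed response times."""

    def __init__(self, backends: Iterable[str], alpha: float = 0.3,
                 initial_ms: float = 50.0) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.ewma_ms: Dict[str, float] = {b: initial_ms for b in backends}

    def pick(self) -> str:
        # Route the next request to the backend with the lowest smoothed latency.
        return min(self.ewma_ms, key=self.ewma_ms.__getitem__)

    def record(self, backend: str, observed_ms: float) -> None:
        # EWMA update: recent samples weigh more, but a single outlier has limited effect.
        old = self.ewma_ms[backend]
        self.ewma_ms[backend] = self.alpha * observed_ms + (1 - self.alpha) * old

lb = LeastLatencyBalancer(["backend-a", "backend-b", "backend-c"])
lb.record("backend-a", 120.0)  # slow response
lb.record("backend-b", 20.0)   # fast response
lb.record("backend-c", 45.0)
print(lb.pick())  # backend-b
```

Because `pick` always chooses the current best backend, a real balancer would also send occasional probes or a small share of traffic to the others; otherwise a backend with a stale, high estimate never receives the new samples that would show it has recovered. Many implementations also combine latency with the number of in-flight requests.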

Cloud Service Provider (CSP) Capabilities

CSPs play a crucial role in optimizing latency by providing a range of services and features tailored to improve network performance. These services include:

- Virtual Private Networks (VPNs): CSPs offer VPN services that allow enterprises to create secure, private networks over the public internet. For lower and more predictable latency, CSPs also offer dedicated private interconnects that bypass congested public networks.

- Content Delivery Networks (CDNs): CDNs cache content closer to end-users, reducing the distance data must travel and minimizing latency.

- Load Balancing: CSPs provide load balancing services that distribute traffic across multiple servers, ensuring optimal performance and reducing latency spikes.

- Network Optimization Services: CSPs offer network optimization services that monitor and analyze network traffic, identifying and resolving bottlenecks that contribute to latency.

By leveraging these services, enterprises can significantly reduce latency and improve the overall performance of their cloud applications.

Benefits of Using CSPs for Latency Optimization

- Reduced Latency: CSPs provide a suite of services and features specifically designed to minimize latency, allowing enterprises to deliver a seamless user experience.

- Improved Performance: By optimizing latency, CSPs enhance the overall performance of cloud applications, resulting in faster response times and increased productivity.

- Cost Savings: CSPs offer cost-effective latency optimization solutions that can help enterprises reduce their infrastructure expenses.

- Expertise and Support: CSPs have the expertise and experience to effectively manage and optimize latency, providing enterprises with peace of mind and freeing up internal resources.

Best Practices for Using CSPs to Optimize Latency

- Choose the Right CSP: Select a CSP that offers a comprehensive suite of latency optimization services and has a proven track record in delivering high-performance networks.

- Utilize CDN Services: Leverage CDN services to cache content closer to end-users, reducing latency and improving user experience.

- Implement Load Balancing: Use load balancing services to distribute traffic evenly across multiple servers, preventing latency spikes and ensuring optimal performance.

- Monitor Network Traffic: Regularly monitor network traffic to identify and resolve any bottlenecks that may contribute to latency.

- Work with CSP Support: Collaborate with CSP support teams to optimize network configurations and implement best practices for latency reduction.

Monitoring and Troubleshooting Latency Issues

Latency monitoring is critical in cloud networks to ensure optimal performance and user experience. By continuously measuring and analyzing latency, network administrators can proactively identify and resolve issues before they impact applications and services.

Tools and Techniques for Measuring Latency

Several tools and techniques are available for measuring latency in cloud networks, including:

- Ping: A simple command-line utility that sends packets to a target IP address and measures the round-trip time.

- Traceroute: A tool that traces the path of packets from a source to a destination, providing information about latency and hop-by-hop details.

- Network performance monitoring tools: Specialized software that provides comprehensive monitoring and analysis capabilities for cloud networks, including latency measurements.
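Because ICMP is often blocked by firewalls and cloud security rules, a common alternative to `ping` is to time a TCP handshake to a port the service actually listens on. This sketch uses only Python's standard library. It resolves the name once so DNS time is not counted, and because the measured time also includes local socket overhead, it approximates rather than equals the network round-trip time. The function name is hypothetical.

```python
import socket
import time
from typing import List

def tcp_connect_latency_ms(host: str, port: int, samples: int = 5,
                           timeout: float = 2.0) -> List[float]:
    """Time several TCP handshakes to host:port and return each duration in ms."""
    # Resolve the name once so DNS lookup time is not included in the samples.
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    results = []
    for _ in range(samples):
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            start = time.perf_counter()
            sock.connect(addr)  # returns once the server's SYN-ACK arrives (about one RTT)
            results.append((time.perf_counter() - start) * 1000)
    return results
```

For example, `tcp_connect_latency_ms("example.com", 443)` would return five samples in milliseconds; `example.com` and port 443 are placeholders for your own endpoint. Looking at the minimum and median rather than a single sample reduces the effect of one-off delays.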

Common Causes of Latency Problems

Common causes of latency problems in cloud networks include:

- Network congestion: Excessive traffic can cause packets to be delayed or dropped, resulting in increased latency.

- Slow or unreliable network components: Outdated or poorly configured network devices, such as routers and switches, can introduce latency.

- Long network paths: Data packets traveling long distances across multiple hops can experience significant latency.

- Cloud resource limitations: Insufficient CPU, memory, or storage resources in cloud instances can lead to latency issues.

- DNS resolution problems: Delays in resolving domain names to IP addresses can contribute to latency.

Troubleshooting Latency Problems

Troubleshooting latency problems involves the following steps:

- Identify the affected components: Use monitoring tools to identify the specific network components or cloud resources experiencing latency.

- Determine the root cause: Analyze latency measurements and network performance data to identify the underlying cause of the problem.

- Resolve the issue: Implement appropriate measures to address the root cause, such as optimizing network configurations, upgrading network components, or scaling cloud resources.

- Verify the resolution: After implementing the solution, re-measure latency to ensure the problem has been resolved.

Best Practices for Managing Latency

Best practices for managing latency in cloud networks include:

- Monitor latency continuously: Establish proactive monitoring systems to detect and resolve latency issues before they impact users.

- Optimize network configurations: Use techniques such as load balancing and traffic shaping to distribute traffic efficiently and reduce congestion.

- Use high-performance network components: Invest in reliable and high-speed network devices to minimize latency.

- Choose low-latency cloud regions: Select cloud regions that are geographically close to users to reduce network latency.

- Minimize network hops: Design network architectures to minimize the number of hops between source and destination.

Latency Optimization for Specific Cloud Applications

Minimizing latency in cloud networks is crucial for ensuring optimal performance of cloud applications. Different types of cloud applications have varying sensitivity to latency, and it’s essential to identify these applications and apply specific optimization techniques to enhance their performance.

Latency-Sensitive Cloud Applications

Applications that heavily rely on real-time interactions, such as video conferencing, online gaming, and financial trading platforms, are particularly susceptible to latency. These applications require low and consistent latency to ensure a seamless user experience.

Optimization Techniques

Optimizing latency for specific cloud applications involves a range of techniques, including:

- Selecting the Right Cloud Region: Choosing a cloud region that is geographically closer to the majority of users can significantly reduce latency.

- Using a Content Delivery Network (CDN): A CDN caches static content at multiple locations, reducing the distance data needs to travel to reach users.

- Optimizing Network Architecture: Designing a network architecture that minimizes the number of hops and utilizes high-performance network devices can improve latency.

- Implementing Load Balancing: Distributing traffic across multiple servers helps reduce latency by preventing any single server from becoming overloaded.

Case Studies

Numerous case studies demonstrate the successful application of latency optimization techniques for different application types:

- Video Conferencing: Zoom optimized its network architecture by implementing a multi-CDN approach, reducing latency by up to 30%.

- Online Gaming: Riot Games implemented a custom network infrastructure for its popular game League of Legends, reducing latency by 50%.

- Financial Trading: The New York Stock Exchange deployed a high-speed fiber optic network to reduce latency for its trading platform, improving execution times by milliseconds.

Cloud Networking Latency Optimization Tools

Optimizing latency in cloud networks requires a combination of tools and techniques. Here’s a list of valuable resources and tools available:

Monitoring and Analysis Tools

- CloudWatch (AWS): Monitors network performance metrics and provides insights into latency issues.

- Cloud Monitoring (GCP): Collects and analyzes performance data, including network latency.

- Azure Monitor (Azure): Monitors cloud resources and provides detailed network performance insights.

Network Performance Testing Tools

- iPerf3: Open-source tool for measuring network throughput, plus jitter and packet loss in UDP mode.

- ping: Standard network utility for testing latency and packet loss.

- Traceroute: Tool for tracing the path of packets through a network, identifying potential latency bottlenecks.

Network Optimization Tools

- CDN services (Amazon CloudFront, Google Cloud CDN, Azure Front Door): Content Delivery Network (CDN) services that reduce latency for content delivery.

- Virtual Private Cloud (VPC): Virtual network environment within a cloud provider, allowing for optimized routing and network configuration.

- Traffic Steering: Mechanisms for directing network traffic based on performance metrics, such as latency.

Selecting the Right Tools

The choice of tools depends on the specific optimization needs and cloud environment. Consider the following factors:

- Monitoring requirements: Determine the level of network performance visibility and analysis needed.

- Network testing needs: Identify the specific latency metrics and network paths to be tested.

- Optimization objectives: Consider the desired latency reduction and performance improvements.

Best Practices for Cloud Networking Latency Optimization

Cloud networking latency optimization is crucial for enhancing the performance and user experience of cloud-based applications. By implementing best practices, organizations can effectively reduce latency and ensure optimal network performance. Here are some key recommendations and guidelines for latency optimization:

Network Architecture Optimization

- Design a hierarchical network architecture: Implement a structured, multi-tiered network (for example, core, distribution, and access layers) so that the number of hops between any two endpoints stays small and predictable.

- Use high-speed interconnects: Utilize high-bandwidth interconnects such as 10GbE or 40GbE between network devices to reduce serialization delay and congestion.

- Implement load balancing: Distribute traffic across multiple servers or network paths to avoid bottlenecks and improve latency.
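Part of the benefit of faster interconnects is lower serialization delay, the time needed to clock a packet's bits onto the wire. The arithmetic is simple enough to sketch. The 1,500-byte packet size is an assumption (a common Ethernet MTU), and the figures exclude propagation and queuing delay.

```python
def serialization_delay_us(packet_bytes: int, link_gbps: float) -> float:
    """Time to clock one packet onto the wire, in microseconds.

    1 Gbps carries 1,000 bits per microsecond, hence the factor of 1,000.
    """
    bits = packet_bytes * 8
    return bits / (link_gbps * 1_000)

for gbps in (1, 10, 40):
    print(f"{gbps} Gbps: {serialization_delay_us(1500, gbps):.2f} us")
# 1 Gbps: 12.00 us
# 10 Gbps: 1.20 us
# 40 Gbps: 0.30 us
```

At these scales serialization delay is small compared with cross-region propagation delay, but it is paid again at every store-and-forward hop and grows whenever packets queue behind one another.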

Virtualization Optimization

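- Use efficient virtual machine (VM) placement: Place VMs on physical servers with sufficient resources and low latency to the network.

- Optimize VM networking: Configure VM network settings to minimize per-packet overhead, for example by enabling jumbo frames where every device on the path supports them (this mainly improves throughput and CPU efficiency for bulk transfers) or by disabling unnecessary protocols.

- Consider network virtualization overlays carefully: Overlays such as VXLAN or NVGRE add encapsulation overhead rather than bypassing the hypervisor network stack. To bypass the hypervisor stack, use SR-IOV-based accelerated networking where available, and prefer NICs that can offload overlay encapsulation.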

Application Optimization

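- Use latency-sensitive protocols: Where appropriate, use protocols that need fewer round trips or avoid head-of-line blocking, such as QUIC (which combines the transport and TLS handshakes and runs over UDP), or plain UDP for traffic that can tolerate some loss, instead of TCP.

- Minimize application data transfer: Reduce the amount of data transferred between applications and the network by using compression or caching techniques.

- Implement asynchronous communication: Use asynchronous communication patterns to avoid blocking operations, so that independent requests can proceed in parallel instead of waiting on one another.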
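When an application makes several independent network calls, doing them one after another adds their latencies together, while issuing them concurrently bounds the total by the slowest call. The sketch below shows this with Python's `asyncio`; `call_service` and the service names are hypothetical, and `asyncio.sleep` stands in for waiting on a real network response.

```python
import asyncio
import time

async def call_service(name: str, delay_s: float) -> str:
    # Hypothetical network call; asyncio.sleep simulates waiting for a response.
    await asyncio.sleep(delay_s)
    return f"{name} done"

SERVICES = ("auth", "catalog", "pricing")  # hypothetical independent dependencies

async def sequential() -> list:
    # Each call waits for the previous one: latencies add up.
    return [await call_service(name, 0.1) for name in SERVICES]

async def concurrent() -> list:
    # All calls are in flight at once: total is roughly the slowest call.
    return await asyncio.gather(*(call_service(name, 0.1) for name in SERVICES))

for strategy in (sequential, concurrent):
    start = time.perf_counter()
    results = asyncio.run(strategy())
    print(f"{strategy.__name__}: {time.perf_counter() - start:.2f} s {results}")
```

With three 100 ms calls, the sequential version takes roughly 0.3 s and the concurrent one roughly 0.1 s. This only helps when the calls do not depend on each other's results.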

Monitoring and Troubleshooting

- Establish latency baselines: Determine the acceptable latency levels for your applications and monitor latency metrics to identify performance deviations.

- Use network monitoring tools: Utilize network monitoring tools to identify latency issues, such as packet loss, jitter, or high CPU utilization.

- Perform regular latency testing: Conduct regular latency tests to identify potential bottlenecks and proactively address latency issues.
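Latency baselines are usually expressed as percentiles rather than averages, because tail latency (p95, p99) is what users notice during slowdowns. The sketch below computes percentiles with Python's `statistics.quantiles` and flags any that exceed a baseline by more than a tolerance. The baseline values, the 20% tolerance, and the sample data are hypothetical.

```python
import statistics
from typing import Dict, Sequence

def latency_percentiles(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Return p50, p95, and p99 of latency samples in ms (needs at least two samples)."""
    # quantiles(n=100) returns the 99 cut points for percentiles 1..99.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}

def deviations(current: Dict[str, float], baseline: Dict[str, float],
               tolerance: float = 0.2) -> Dict[str, float]:
    """Percentiles exceeding the baseline by more than `tolerance` (20% by default)."""
    return {k: v for k, v in current.items() if v > baseline[k] * (1 + tolerance)}

baseline = {"p50": 25.0, "p95": 100.0, "p99": 110.0}  # hypothetical baseline, ms
today = latency_percentiles([18, 19, 21, 22, 25, 30, 44, 60, 95, 140])
print(deviations(today, baseline))  # {'p99': 135.95}
```

Ten samples are far too few for a meaningful p99; in practice, compute percentiles over many samples per time window before comparing them with the baseline.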

Additional Tips

- Choose a reliable cloud provider: Select a cloud provider with a proven track record of low latency and high network performance.

- Consider geo-distributed deployments: Deploy applications in multiple geographic regions to reduce latency for users in different locations.

- Utilize a content delivery network (CDN): Use a CDN to cache content closer to users, reducing latency for accessing static resources.

By implementing these best practices, organizations can effectively optimize latency in cloud networks, enhancing the performance and user experience of cloud-based applications.

Emerging Trends in Cloud Networking Latency Optimization

The cloud networking landscape is constantly evolving, with new technologies and trends emerging that have the potential to significantly impact latency optimization. These trends are shaping the future of cloud networking and application performance, and organizations need to be aware of them in order to stay ahead of the curve.

One of the most important emerging trends is the adoption of software-defined networking (SDN). SDN decouples the network control plane from the data plane, which gives organizations greater flexibility and control over their networks. This can lead to significant latency reductions, as organizations can now program their networks to optimize traffic flow and reduce congestion.

Another emerging trend is the use of artificial intelligence (AI) and machine learning (ML) for latency optimization. AI and ML can be used to monitor network traffic patterns and identify potential bottlenecks. This information can then be used to automatically adjust network configurations and routing to reduce latency.

Multi-Cloud and Hybrid Cloud Environments

The increasing adoption of multi-cloud and hybrid cloud environments is also driving the need for new latency optimization techniques. In these environments, applications and data are distributed across multiple clouds and on-premises data centers. This can lead to increased latency if traffic is not routed optimally.

To address this challenge, organizations are adopting a variety of techniques, such as using cloud-based network optimization services, deploying virtual network appliances (VNAs), and implementing multi-path routing. These techniques can help to reduce latency and improve application performance in multi-cloud and hybrid cloud environments.

Edge Computing

Edge computing is another emerging trend that is having a significant impact on latency optimization. Edge computing brings computing and storage resources closer to the edge of the network, which can reduce latency for applications that require real-time data processing.

For example, edge computing can be used to deploy latency-sensitive applications, such as autonomous vehicles and augmented reality (AR) applications.

5G and Wi-Fi 6

The deployment of 5G and Wi-Fi 6 networks is also expected to have a major impact on latency optimization. These new technologies offer significantly faster speeds and lower latency than previous generations of wireless technology. This can lead to significant improvements in application performance, particularly for applications that require real-time data transfer.

Cloud Networking Latency Optimization for Mobile and IoT Applications

Optimizing latency for mobile and IoT applications poses unique challenges due to their inherent mobility, intermittent connectivity, and diverse device capabilities. To ensure seamless performance, specific techniques and strategies are required to minimize latency and enhance user experience.

Techniques for Latency Reduction

- Network Segmentation: Divide the network into smaller, more manageable segments to reduce congestion and improve performance for latency-sensitive applications.

- Traffic Prioritization: Assign higher priority to latency-sensitive mobile and IoT traffic so that it receives preferential treatment and experiences fewer delays.

- Caching and Edge Computing: Store frequently accessed data and applications closer to the edge of the network, reducing the distance data must travel and minimizing latency.

- Content Delivery Networks (CDNs): Distribute content across geographically dispersed servers to reduce the distance between users and the content, resulting in faster delivery and lower latency.

- DNS Optimization: Use optimized DNS servers and caching mechanisms to minimize the time it takes to resolve domain names and establish connections.

Benefits of Latency Optimization

- Improved User Experience: Reduced latency enhances responsiveness, making applications feel faster and more intuitive.

- Increased Productivity: Lower latency enables faster data transfer and processing, increasing efficiency and productivity for mobile and IoT applications.

- Enhanced Reliability: Optimized latency reduces the likelihood of dropped connections or delayed responses, improving application reliability.

- Cost Savings: Techniques such as caching and edge processing reduce latency and can also cut backhaul bandwidth requirements and infrastructure costs.

- Competitive Advantage: Providing low-latency applications can differentiate businesses from competitors and enhance customer satisfaction.

Cost Considerations in Cloud Networking Latency Optimization

Latency optimization in cloud networks can have cost implications that businesses must consider to make informed decisions. Understanding these costs and employing effective strategies can help organizations balance performance and financial constraints.

Factors influencing the cost of optimization efforts include:

Infrastructure Costs

- Hardware upgrades (e.g., high-performance routers, switches)

- Software licensing (e.g., network optimization tools, monitoring platforms)

- Network connectivity (e.g., dedicated leased lines, cloud interconnect services)

Operational Costs

- Maintenance and support (e.g., expert consulting, technical support)

- Monitoring and troubleshooting (e.g., network monitoring tools, performance analysis)

- Staff training and development (e.g., upskilling network engineers)

Performance Trade-offs

Optimizing for latency may involve trade-offs with other performance metrics:

- Throughput: Some latency optimizations can reduce throughput or efficiency; for example, disabling TCP's Nagle algorithm sends small writes immediately but produces more small packets.

- Reliability: Some optimization techniques may introduce additional complexity, potentially impacting reliability.

Strategies for balancing cost and performance considerations:

Prioritizing Latency-Sensitive Applications and Services

Identify and prioritize applications and services that are highly latency-sensitive and require optimization.

Optimizing Network Configurations and Routing Strategies

Implement network configurations and routing strategies that minimize latency, such as using low-latency routing protocols and optimizing path selection.

Utilizing Cloud-Based Latency Optimization Services

Leverage cloud-based services that provide latency optimization capabilities, such as cloud interconnect services and latency-aware load balancers.

Considering Long-Term Cost Implications

Consider the long-term cost implications of latency optimization efforts, including the potential impact on operational expenses and the return on investment.

Security Implications of Cloud Networking Latency Optimization

Latency optimization techniques can introduce security implications that need to be carefully considered and mitigated. Techniques that remove intermediate hops, such as bypassing inspection appliances, or that relax protocol protections to save round trips can weaken the defenses in the data path and make it easier for attackers to gain access to sensitive data or systems.

Additionally, latency optimization techniques that involve caching data or using content delivery networks (CDNs) can create new attack vectors for attackers to exploit.

Mitigating Security Risks

To mitigate the security risks associated with latency optimization, it is important to implement the following measures:

- Use strong encryption and authentication mechanisms to protect data in transit and at rest.

- Implement network security controls, such as firewalls and intrusion detection systems, to protect against unauthorized access to network resources.

- Regularly monitor network traffic for suspicious activity and take appropriate action to mitigate any threats.

- Educate users about the security risks associated with latency optimization and provide guidance on how to protect their data and systems.

By implementing these measures, organizations can mitigate the security risks associated with latency optimization and improve the overall security of their cloud networks.

Case Studies of Successful Cloud Networking Latency Optimization

Cloud networking latency optimization has gained significant traction in recent years, leading to tangible benefits for businesses across various industries. This section presents compelling case studies that showcase successful latency optimization implementations, highlighting the challenges faced, solutions deployed, and the remarkable results achieved.

Case Study: E-commerce Giant Enhances Customer Experience with Latency Reduction

A leading e-commerce company faced challenges with slow website loading times and frequent customer drop-offs during peak hours. By implementing a comprehensive latency optimization strategy that involved optimizing DNS settings, leveraging CDN services, and adopting a multi-cloud approach, the company achieved a significant reduction in page load times, resulting in improved customer satisfaction and increased conversion rates.

- Challenge: Slow website loading times and customer drop-offs during peak hours.

- Solution: Optimized DNS settings, leveraged CDN services, adopted a multi-cloud approach.

- Results: Significant reduction in page load times, improved customer satisfaction, increased conversion rates.

Case Study: Cloud Gaming Provider Achieves Seamless Gameplay with Low Latency

A cloud gaming provider aimed to deliver a lag-free gaming experience to its users. By employing techniques such as network traffic prioritization, load balancing, and latency monitoring, the provider was able to minimize latency and ensure a smooth and immersive gaming experience for its customers.

- Challenge: Delivering a lag-free gaming experience to users.

- Solution: Implemented network traffic prioritization, load balancing, and latency monitoring.

- Results: Minimized latency, ensured a smooth and immersive gaming experience for customers.

Future Directions in Cloud Networking Latency Optimization

The future of cloud networking latency optimization holds exciting possibilities as technological advancements continue to push the boundaries of performance.

Research Directions

Research efforts will focus on developing innovative techniques to minimize latency, such as:

- Network Function Virtualization (NFV) and Software-Defined Networking (SDN): These technologies enable dynamic resource allocation and optimization, reducing latency by eliminating bottlenecks.

- Artificial Intelligence (AI) and Machine Learning (ML): AI/ML algorithms can analyze network traffic patterns and predict potential latency issues, allowing for proactive mitigation.

Technological Advancements

Advancements in hardware and software will also play a significant role in latency reduction:

- High-Speed Interconnects: Faster interconnects, such as 400GbE and beyond, will enable data transfer at higher speeds, reducing latency.

- Cloud-Native Applications: Applications designed specifically for cloud environments can leverage optimized networking protocols and minimize latency by reducing hops and unnecessary overhead.

Emerging Trends

Emerging trends will shape the evolution of latency optimization strategies:

- Edge Computing: Processing data closer to end-users reduces latency by minimizing the distance data must travel.

- 5G and Beyond: Next-generation wireless technologies offer significantly lower latency, enabling real-time applications and IoT devices.

- Multi-Cloud Architectures: Optimizing latency across multiple cloud providers requires advanced techniques and collaboration.

Ultimate Conclusion

Embark on this transformative journey towards latency optimization, and witness the remarkable impact it has on your cloud networks. Reduced latency translates to enhanced user satisfaction, seamless application performance, and a competitive edge in today’s fast-paced digital landscape.